The Subscription Scoop: Analyzing Subscription Box Reviews using GridDB and AI¶

Subscription Boxes are a rapidly growing segment in today's e-commerce landscape. They have become increasingly popular among both businesses and customers. For businesses, they help create recurring revenue models whereas for customers, they offer highly customized and often personalized experiences. Several e-commerce platforms enable niche players and established brands to sell subscription boxes. In this case study, we specifically explore the subscription boxes sold by various independent stores on Amazon. We will store the data in containers, specialized structures within GridDB that allow for high-performance data ingestion. The highly scalable nature of containers makes them suitable for scenarios where data is continuously generated, such as customer reviews of subscription box services.

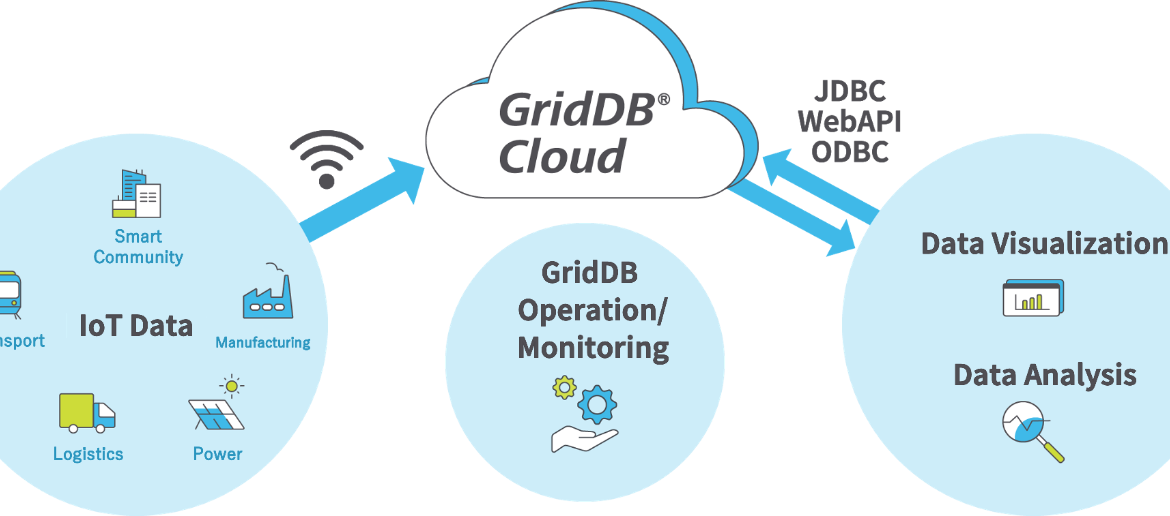

The data we use for this analysis is sourced from the official website of the University of California San Diego. There are two ways to download the dataset 1. Manually 2. Programmatically using Python. To download it manually, use the URL - https://amazon-reviews-2023.github.io/ and access the section 'Grouped by Category'. Then, select 'Subscription Boxes'. Download the 'Reviews' and the 'Metadata' (highlighted in yellow in the image below). In this article, we use option 2 to download the datasets programmatically using the 'requests' library. The reviews and metadata datasets are then joined to get a combined dataframe which in turn, is loaded to the GridDB cloud (using the Web API). We then use GridDB SQL to query the data and gather insights. We also leverage the power of AI- the popular Hugging Face Library to analyze customer reviews and determine stores where the sentiments are either overwhelmingly positive or negative. We use pre-trained sentiment analysis models from Hugging Face to efficiently assess customer feedback and gain valuable insights into which subscription boxes are highly rated or need improvement.

Dataset Citation¶

Rappaz, J., McAuley, J., & Aberer, K. (2021). Recommendation on live-streaming platforms: Dynamic availability and repeat consumption. Proceedings of the 15th ACM Conference on Recommender Systems (RecSys). https://cseweb.ucsd.edu/~jmcauley/datasets.html#amazon_reviews

Image(filename='GridDB Dataset Screenshot.png',width=800, height=200)

Importing Libraries¶

import os

import pandas as pd

import requests

from IPython.display import Image

import base64 # for utf-8 encoding

import gzip # to handle .gz file extensions

import json

import http.client

import textwrap

import logging # To suppress the logging for requests to avoid seeing the send/reply details

import plotly.express as px

import plotly.graph_objects as go

from transformers import AutoTokenizer, pipeline

from tqdm import tqdm # Import tqdm for progress bar

# Enable debugging output

http.client.HTTPConnection.debuglevel = 1

Configuring Database Users in GridDB for querying¶

Image(filename='GridDBCloud4.png',width=500, height=200)

Creating the Database User Credentials¶

GridDB has two types of passwords - Portal User Credentials and Database User Credentials. When you sign up with GridDB cloud, you will be assigned a set of credentials to be able to access the GridDB portal. You can change the provided password. These are the portal user credentials. Once you are in the portal, you can add yourself as a database user and set your credentials after assigning permissions to the database. Note that this also involves whitelisting your IP Address.

Image(filename='GridDBCloud-1-db_user.png',width=500, height=200)

Once the database user is created, click on the 'Database' icon to access the cluster and corresponding databases within the cluster.

Once this is done, click on the Database User name as shown below.

Image(filename='GridDBCloud2.png',width=500, height=200)

Give the necessary permissions to the database resource as shown below.

Image(filename='GridDBCloud3.png',width=500, height=200)

Loading the Datasets as Python DataFrames¶

As mentioned in the Introduction, we use the programmatic way to download the files JSON datasets into a Python Dataframe. For this, we use the 'requests' and the 'gzip' library.

Downloading the reviews¶

# URL of the .jsonl.gz file

url = "https://datarepo.eng.ucsd.edu/mcauley_group/data/amazon_2023/raw/review_categories/Subscription_Boxes.jsonl.gz"

# Download the file

response = requests.get(url, stream=True)

if response.status_code == 200:

# Save the file as a .gz file

with open("dataset1.jsonl.gz", "wb") as file:

for chunk in response.iter_content(chunk_size=1024):

file.write(chunk)

print("File downloaded and saved as dataset1.jsonl.gz")

else:

print(f"Failed to download file. Status code: {response.status_code}")

# Read and process the .jsonl.gz file

data = []

with gzip.open("dataset1.jsonl.gz", "rt", encoding="utf-8") as gz_file:

for line in gz_file:

# Parse each line as JSON

data.append(json.loads(line))

send: b'GET /mcauley_group/data/amazon_2023/raw/review_categories/Subscription_Boxes.jsonl.gz HTTP/1.1\r\nHost: datarepo.eng.ucsd.edu\r\nUser-Agent: python-requests/2.32.2\r\nAccept-Encoding: gzip, deflate, br, zstd\r\nAccept: */*\r\nConnection: keep-alive\r\n\r\n' reply: 'HTTP/1.1 200 OK\r\n' header: Date: Sat, 18 Jan 2025 18:45:02 GMT header: Server: Apache/2.4.29 (Ubuntu) header: Last-Modified: Mon, 26 Feb 2024 20:33:58 GMT header: ETag: "29c073-6124ed44c058d" header: Accept-Ranges: bytes header: Content-Length: 2736243 header: Keep-Alive: timeout=5, max=100 header: Connection: Keep-Alive header: Content-Type: application/x-gzip File downloaded and saved as dataset1.jsonl.gz

subscription_boxes_reviews_df = pd.DataFrame(data)

subscription_boxes_reviews_df.columns

Index(['rating', 'title', 'text', 'images', 'asin', 'parent_asin', 'user_id',

'timestamp', 'helpful_vote', 'verified_purchase'],

dtype='object')

Downloading the Metadata¶

# URL of the .jsonl.gz file

url = "https://datarepo.eng.ucsd.edu/mcauley_group/data/amazon_2023/raw/meta_categories/meta_Subscription_Boxes.jsonl.gz"

# Download the file

response = requests.get(url, stream=True)

if response.status_code == 200:

# Save the file as a .gz file

with open("dataset2.jsonl.gz", "wb") as file:

for chunk in response.iter_content(chunk_size=1024):

file.write(chunk)

print("File downloaded and saved as dataset2.jsonl.gz")

else:

print(f"Failed to download file. Status code: {response.status_code}")

# Read and process the .jsonl.gz file

metadata = []

with gzip.open("dataset2.jsonl.gz", "rt", encoding="utf-8") as gz_file:

for line in gz_file:

# Parse each line as JSON

metadata.append(json.loads(line))

send: b'GET /mcauley_group/data/amazon_2023/raw/meta_categories/meta_Subscription_Boxes.jsonl.gz HTTP/1.1\r\nHost: datarepo.eng.ucsd.edu\r\nUser-Agent: python-requests/2.32.2\r\nAccept-Encoding: gzip, deflate, br, zstd\r\nAccept: */*\r\nConnection: keep-alive\r\n\r\n' reply: 'HTTP/1.1 200 OK\r\n' header: Date: Sat, 18 Jan 2025 18:45:33 GMT header: Server: Apache/2.4.29 (Ubuntu) header: Last-Modified: Mon, 26 Feb 2024 19:00:46 GMT header: ETag: "45b79-6124d86ffdb2f" header: Accept-Ranges: bytes header: Content-Length: 285561 header: Keep-Alive: timeout=5, max=100 header: Connection: Keep-Alive header: Content-Type: application/x-gzip File downloaded and saved as dataset2.jsonl.gz

subscription_boxes_metadata_df = pd.DataFrame(metadata)

subscription_boxes_combined_df = subscription_boxes_reviews_df.merge(subscription_boxes_metadata_df, on="parent_asin", how="inner")

#theData = "{\"rows\":" + request_body_subscription_boxes_json + "}"

# Removing unnecessary columns

subscription_boxes_combined_df = subscription_boxes_combined_df.drop(columns=['images_x','images_y'])

Encoding Credentials for GridDB¶

While connecting to GridDB cloud, the Request that is constructed should have the username and password encoded to base64. To determine the base64 encoded credentials for your username and password, you can use a tool such as https://www.base64encode.org/ or the base64 library in python. GridDB prefers the 'basic authentication' format - "Basic username:password". Refer to the GridDB resource to learn more.

username = "XXXX" # Replace with your username

password = "YYYYY" # Replace with your password

credentials = f"{username}:{password}"

encoded_credentials = base64.b64encode(credentials.encode()).decode()

print(f"Encoded credentials: Basic {encoded_credentials}")

#Construct an object to hold the request headers (ensure that you replace the XXX placeholder with the correct value that matches the credentials for your GridDB instance)

#header_obj = {"Authorization":"Basic dXNlck0wMWt3VzE0Y3Q6Q1BYZ2liNzg4","Content-Type":"application/json; charset=UTF-8","User-Agent":"PostmanRuntime/7.29.0"}

header_obj = {

"Authorization": f"Basic {encoded_credentials}", # Add encoded credentials here

"Content-Type": "application/json", # Optional; depends on API requirements

"charset": "UTF-8",

"User-Agent":"PostmanRuntime/7.29.0"

}

#Construct the base URL based on your GridDB cluster you'd like to connect to (ensure that you replace the placeholders in the URL below with the correct values that correspond to your GridDB instance)

base_url = 'https://[host]:[port]/griddb/v2/[clustername]/dbs/[database_name]/'

data_obj = {

"container_name": "Amazon_subscription_boxes",

"container_type": "COLLECTION",

"rowkey": False,

"columns": [

{"name": "rating", "type": "DOUBLE"},

{"name": "title_x", "type": "STRING"},

{"name": "text", "type": "STRING"},

{"name": "asin", "type": "STRING"},

{"name": "parent_asin", "type": "STRING"},

{"name": "user_id", "type": "STRING"},

{"name": "timestamp", "type": "LONG"},

{"name": "helpful_vote", "type": "INTEGER"},

{"name": "verified_purchase", "type": "BOOL"},

{"name": "main_category", "type": "STRING"},

{"name": "title_y", "type": "STRING"},

{"name": "average_rating", "type": "DOUBLE"},

{"name": "rating_number", "type": "INTEGER"},

{"name": "features", "type": "STRING"},

{"name": "description", "type": "STRING"},

{"name": "price", "type": "DOUBLE"},

{"name": "videos", "type": "STRING"},

{"name": "store", "type": "STRING"},

{"name": "categories", "type": "STRING"},

{"name": "details", "type": "STRING"},

{"name": "bought_together", "type": "STRING"}

]

}

#Set up the GridDB WebAPI URL

url = base_url + 'containers'

#Invoke the GridDB WebAPI with the headers and the request body

x = requests.post(url, json = data_obj, headers = header_obj)

Loading the Container (Row Registration)¶

Row Registration is the process of inserting rows into the newly created container. It is similar to the INSERT SQL DDL task in standard relational databases. In GridDB, row registration is performed by passing rows as a JSON object while using the WebAPI. Rows are denoted in the form of JSON objects whereas column names and data are denoted as key-value pairs. Row Registration can be done as single rows or in batches.

# Replace empty lists with empty strings or None

subscription_boxes_combined_df = subscription_boxes_combined_df.applymap(

lambda x: "" if isinstance(x, list) and not x else x

)

# Check for problematic values in DOUBLE columns

double_columns = ["average_rating", "price"]

# Replace invalid or missing values with 0.0

for col in double_columns:

subscription_boxes_combined_df[col] = pd.to_numeric(subscription_boxes_combined_df[col], errors='coerce').fillna(0.0)

C:\Users\mg_su\AppData\Local\Temp\ipykernel_15956\3612489214.py:2: FutureWarning: DataFrame.applymap has been deprecated. Use DataFrame.map instead. subscription_boxes_combined_df = subscription_boxes_combined_df.applymap(

# Ensure the DataFrame columns match the schema order for GridDB

column_order = [

"rating", "title_x", "text", "asin", "parent_asin", "user_id",

"timestamp", "helpful_vote", "verified_purchase",

"main_category", "title_y", "average_rating", "rating_number",

"features", "description", "price", "videos", "store",

"categories", "details", "bought_together"

]

# Reorder columns in the DataFrame

subscription_boxes_combined_df = subscription_boxes_combined_df[column_order]

# Convert any non-string fields to JSON strings if required

json_fields = ["features", "videos", "categories", "details"]

for col in json_fields:

subscription_boxes_combined_df[col] = subscription_boxes_combined_df[col].apply(

lambda x: json.dumps(x) if isinstance(x, (list, dict)) else x

)

subscription_boxes_combined_df['average_rating'] = subscription_boxes_combined_df['average_rating'].astype(float)

subscription_boxes_combined_df['rating'] = subscription_boxes_combined_df['rating'].astype(float)

subscription_boxes_combined_df['price'] = subscription_boxes_combined_df['price'].astype(float)

# Parse the JSON string into a Python list of dictionaries

request_body_subscription_boxes = subscription_boxes_combined_df.to_json(orient='values')

#Setup the URL to be used to invoke the GridDB WebAPI to register rows in the container created previously

url = base_url + 'containers/Amazon_subscription_boxes/rows'

#Invoke the GridDB WebAPI using the request constructed

x = requests.put(url, data=request_body_subscription_boxes, headers=header_obj)

Analysis using GridDB SQL¶

Top 10 subscription boxes by Average Ratings and Popularity¶

Though not a standard relational database, GridDB supports subqueries and aggregations similar to relational databases. This makes it a hybrid of relational databases and NoSQL databases. Refer to this resource to learn more on the SELECT clause in GridDB. To learn about subqueries, refer to this resource.

sql_query1 = (f"""

SELECT *

FROM (

SELECT

store,

MAX(average_rating) AS Avg_User_Rating,

SUM(rating_number) AS Total_Ratings

FROM

Amazon_subscription_boxes

GROUP BY

store

ORDER BY

Avg_User_Rating DESC,

Total_Ratings DESC

LIMIT 10

) AS Top_Stores

ORDER BY Total_Ratings DESC;

""")

#Setup the URL to be used to invoke the GridDB WebAPI to retrieve data from the container

url = base_url + 'sql'

# Construct the request body

request_body = json.dumps([

{

"type": "sql-select",

"stmt": sql_query1

}

])

# Disable HTTP debugging

http.client.HTTPConnection.debuglevel = 0

#Invoke the GridDB WebAPI

data_req1 = requests.post(url, data=request_body, headers=header_obj)

#Process the response received and construct a Pandas dataframe with the data from the response

myJson = data_req1.json()

top_rated_subscription_boxes = pd.DataFrame(myJson[0]["results"], columns=[myJson[0]["columns"][0]["name"], myJson[0]["columns"][1]["name"],myJson[0]["columns"][2]["name"]])

#top_rated_subscription_boxes.style.hide(axis="index")

# Create a bar chart

fig = px.bar(

top_rated_subscription_boxes,

x='store',

y='Total_Ratings',

title='Top Rated Subscription Boxes by Total Ratings',

text='Total_Ratings',

labels={'store': 'Store', 'Total_Ratings': 'Total Ratings'}

)

# Customize layout

fig.update_traces(textposition='outside')

fig.update_layout(

xaxis_title='Store',

yaxis_title='Total Ratings',

xaxis_tickangle=-45,

title_x=0.5

)

# Display the chart

fig.show()

Insights: Little Dreamers Club and Annie's have been the most rated subscription boxes on Amazon as of 2023.

Stores with Unverified Purchases: Analyzing Trustworthiness¶

GridDB supports standard aggregations like SUM, COUNT, ROUND, AVERAGE, and so on. The list of Aggregation functions supported in GridDB can be found in this resource.

sql_query2 = (f"""

SELECT

store,

COUNT(*) AS total_reviews,

SUM(CASE WHEN verified_purchase THEN 1 ELSE 0 END) AS verified_reviews,

SUM(CASE WHEN NOT verified_purchase THEN 1 ELSE 0 END) AS unverified_reviews,

ROUND(SUM(CASE WHEN verified_purchase THEN 1 ELSE 0 END) * 100.0 / COUNT(*), 2) AS verified_percentage,

ROUND(SUM(CASE WHEN NOT verified_purchase THEN 1 ELSE 0 END) * 100.0 / COUNT(*), 2) AS unverified_percentage

FROM

Amazon_subscription_boxes

GROUP BY

store

HAVING

ROUND(SUM(CASE WHEN NOT verified_purchase THEN 1 ELSE 0 END) * 100.0 / COUNT(*), 2) >

ROUND(SUM(CASE WHEN verified_purchase THEN 1 ELSE 0 END) * 100.0 / COUNT(*), 2)

ORDER BY

unverified_reviews DESC

LIMIT 10;

""")

#Setup the URL to be used to invoke the GridDB WebAPI to retrieve data from the container

url = base_url + 'sql'

# Construct the request body

request_body = json.dumps([

{

"type": "sql-select",

"stmt": sql_query2

}

])

# Validate the constructed request body

#print(request_body)

# Disable HTTP debugging

http.client.HTTPConnection.debuglevel = 0

#Invoke the GridDB WebAPI

data_req2 = requests.post(url, data=request_body, headers=header_obj)

#Process the response received and construct a Pandas dataframe with the data from the response

myJson = data_req2.json()

verified_unverified_reviews = pd.DataFrame(myJson[0]["results"], columns=[myJson[0]["columns"][0]["name"], myJson[0]["columns"][1]["name"],myJson[0]["columns"][2]["name"],myJson[0]["columns"][3]["name"],myJson[0]["columns"][4]["name"],myJson[0]["columns"][5]["name"]])

import plotly.graph_objects as go

# Data from the DataFrame

stores = verified_unverified_reviews["store"]

verified_reviews = verified_unverified_reviews["verified_reviews"]

unverified_reviews = verified_unverified_reviews["unverified_reviews"]

# Create the figure

fig = go.Figure()

# Add verified reviews as the first trace with numbers

fig.add_trace(go.Bar(

y=stores,

x=verified_reviews,

name="Verified Reviews",

orientation="h", # Horizontal orientation

marker=dict(color="green"),

text=verified_reviews, # Display numbers

textposition="auto" # Automatically adjust position for better visibility

))

# Add unverified reviews as the second trace with numbers

fig.add_trace(go.Bar(

y=stores,

x=unverified_reviews,

name="Unverified Reviews",

orientation="h", # Horizontal orientation

marker=dict(color="orange"),

text=unverified_reviews, # Display numbers

textposition="auto" # Automatically adjust position for better visibility

))

# Update layout for better appearance

fig.update_layout(

title="Distribution of Verified and Unverified Reviews by Store",

barmode="stack", # Stacked bar mode

xaxis_title="Number of Reviews",

yaxis_title="Store",

legend_title="Review Type",

template="plotly_white", # Optional: A clean white background

uniformtext_minsize=8, # Ensure text is readable

uniformtext_mode="show", # Force display of text, even in tight spaces

xaxis=dict(tickangle=0) # Ensure x-axis ticks are easy to read

)

# Show the chart

fig.show()

Insight(s): There are a few stores where unverified reviews are more than verified reviews. While this raises potential credibility concerns, it may also denote organic popularity wherein users who bought the product outside the platform end up posting a review.

Top 10 Verified Reviews that were upvoted¶

sql_query3 = (f"""

SELECT

store,

text,

COUNT(helpful_vote) AS helpful_votes

FROM

Amazon_subscription_boxes

WHERE verified_purchase = True

GROUP BY

store

ORDER BY

3 DESC

LIMIT 10;

""")

#Setup the URL to be used to invoke the GridDB WebAPI to retrieve data from the container

url = base_url + 'sql'

# Construct the request body

request_body = json.dumps([

{

"type": "sql-select",

"stmt": sql_query3

}

])

# Validate the constructed request body

#print(request_body)

# Disable HTTP debugging

http.client.HTTPConnection.debuglevel = 0

#Invoke the GridDB WebAPI

data_req3 = requests.post(url, data=request_body, headers=header_obj)

#Process the response received and construct a Pandas dataframe with the data from the response

myJson = data_req3.json()

helpful_votes = pd.DataFrame(myJson[0]["results"], columns=[myJson[0]["columns"][0]["name"], myJson[0]["columns"][1]["name"],myJson[0]["columns"][2]["name"]])

# Define a function to wrap text

def wrap_text(text, width=50):

return "<br>".join(textwrap.wrap(text, width=width))

# Apply wrapping to the 'text' column

helpful_votes['text_wrapped'] = helpful_votes['text'].apply(lambda x: wrap_text(x, width=40))

# Select specific columns for display

subset_df = helpful_votes[['store', 'text_wrapped', 'helpful_votes']]

# Use Styler to display with gridlines and wrapped text

styled_df = subset_df.style.set_table_styles([

{'selector': 'table', 'props': [('border-collapse', 'collapse'), ('width', '100%')]},

{'selector': 'th, td', 'props': [('border', '1px solid black'), ('padding', '8px')]}

])

# Render the DataFrame with gridlines and no index

styled_df = styled_df.hide(axis='index')

# Display the styled DataFrame

styled_df

| store | text_wrapped | helpful_votes |

|---|---|---|

| Allure Beauty Box | Avid fan of Allure Mag, been reading for at Least 10 yrs so readily excitedly joined PROS:::: cheap convenient way to try new Beauty/skin products Fast delivery />CONS::: None of products I received were What was depicted in the graphic. I contacted seller, asking if what was sent monthly was same as shown in graphic and received a puzzling reply ((smh)) scented sample was strong & harsh No lipstick Was hoping for at least tube of lipstick, gloss, etc />Received a notice that price increases with Ictober shipment. MAIN reason I subscribed was because of low cost to try new Skin products. Am going to wait for next shipment before I decide to continue or unsubscribe |

1686 |

| Funko | Always good products. Very exciting experience each time. |

1576 |

| STEM Club | I got 5 to 6 subscriptions so far and most are good. The only thing is sometimes the boxes are not age appropriate. |

1377 |

| KitNipBox | Absolutely useless nonsense and a complete waste of money. Kitty didn't like any of the items |

732 |

| Barkbox | Two SMALL stuffed animals and 2 little bags of treats for $35- nope nope nope. I’ll just be shopping a la cart on Amazon from now on |

391 |

| TheraBox | Some of the items are nice, but I am not interested in about half of them. This might be a nice idea as a gift but would be even better if you had a little bit of control over what you receive. |

337 |

| COCOTIQUE | I loved every thing and could use it all. Things were in it that I would never order Coctique |

325 |

| Simple Loose Leaf Tea Company | I was going to get the first box, which is a great value, and then cancel. But I have changed my mind. The flavor choices were Great and it is a fair amount of tea for the price. |

258 |

| FACETORY | Ok | 249 |

| Bokksu | I DID ENJOY THE ADVENTURE OF THE BOX AND BEING INTRODUCED TO NEW THINGS. BUT THE SNACKS THEMSELVES WERE JUST OK. THERE WERE TOO MAY THINGS THAT WERE SIMILAR FOR THE MONTH OF JULY, LIKE TOO MANY TYPES OF WHORCHEWTISHIRE RICE CRACKERS. A COUPLE OF THOSE WOULD HAVE SUFFICED. THE HAND MADE FIZZING CANDIES WERE INTERESTING AND THE STRAWBERRY MOUSSE LAYERED WAFFLE COOKIES IN THE SHAPE OF A FISH WERE EXCELLENT. I WANTED MORE DESCRIPTION ON THE INFORMATIONAL POSTCARD, LIKE WHY WERE THESE ITEMS PICKED?? I DID NOT RECOGNIZE A THEME. |

226 |

Total Product Variations¶

In the below query, we look at the number of variations (child ASINs) of a parent ASIN. When a product has variations, it means that the product is available in different versions, options, or configurations under the same overarching parent. This concept is commonly used in e-commerce platforms, including Amazon, to organize and display related products efficiently.

sql_query4 = (f"""

SELECT

parent_asin,

store,

COUNT(DISTINCT asin) AS total_variations

FROM Amazon_subscription_boxes

GROUP BY

parent_asin,store

ORDER BY

total_variations DESC

LIMIT 10;

""")

#Setup the URL to be used to invoke the GridDB WebAPI to retrieve data from the container

url = base_url + 'sql'

# Construct the request body

request_body = json.dumps([

{

"type": "sql-select",

"stmt": sql_query4

}

])

# Validate the constructed request body

#print(request_body)

# Disable debug output after running the query

http.client.HTTPConnection.debuglevel = 0

#Invoke the GridDB WebAPI

data_req4 = requests.post(url, data=request_body, headers=header_obj)

#Process the response received and construct a Pandas dataframe with the data from the response

myJson = data_req4.json()

Product_Variations = pd.DataFrame(myJson[0]["results"], columns=[myJson[0]["columns"][0]["name"], myJson[0]["columns"][1]["name"],myJson[0]["columns"][2]["name"]])

Product_Variations.style.hide(axis="index")

| parent_asin | store | total_variations |

|---|---|---|

| B084ZSS5H1 | Prime Accessories HQ | 24 |

| B07QWTTPK7 | STEM Discovery Boxes | 20 |

| B0992LL6TG | JARMABOX | 18 |

| B08SMCV47C | ERIKA'S TEA ROOM MEMORIES MADE WITH EVERY CUP! | 17 |

| B07L9GL97H | Geek Fuel EXP | 16 |

| B08W23KDFR | KIDS READ DAILY | 16 |

| B08KWLQD46 | Annie's | 15 |

| B09R2MXZHC | Funko | 14 |

| B0BCGQ8SJR | The Dally Grind | 13 |

| B0BM57HJXV | Funko | 12 |

Insight(s): We see that Prime Accessories HQ and STEM Discovery Boxes have the highest number of variations (Child ASINS). This indicates that these products have a vast selection of products with features that can be customized or personalized.

Top 10 Subscription boxes with the Highest Rating¶

sql_query5 = (f"""

SELECT

store,

COUNT(rating) AS total_ratings

FROM Amazon_subscription_boxes

WHERE rating >= 4

GROUP BY

store

ORDER BY

total_ratings DESC

LIMIT 10;

""")

#Setup the URL to be used to invoke the GridDB WebAPI to retrieve data from the container

url = base_url + 'sql'

# Construct the request body

request_body = json.dumps([

{

"type": "sql-select",

"stmt": sql_query5

}

])

# Validate the constructed request body

#print(request_body)

# Disable debug output after running the query

http.client.HTTPConnection.debuglevel = 0

#Invoke the GridDB WebAPI

data_req5 = requests.post(url, data=request_body, headers=header_obj)

#Process the response received and construct a Pandas dataframe with the data from the response

myJson = data_req5.json()

top_rated_products = pd.DataFrame(myJson[0]["results"], columns=[myJson[0]["columns"][0]["name"], myJson[0]["columns"][1]["name"]])

#top_rated_products.style.hide(axis="index")

#fig = ff.create_table(top_rated_products)

fig = px.bar(top_rated_products, x="store", y="total_ratings", orientation='v',text="total_ratings",title='Top 10 Subscription boxes with the Highest Rating')

fig.update_traces(textposition='inside')

fig.show()

Analyzing Sentiments based on Customer Reviews¶

sql_query6 = (f"""

SELECT

store,

text

FROM Amazon_subscription_boxes

WHERE (rating = 5 OR rating =1)

AND verified_purchase = True;

""")

#Setup the URL to be used to invoke the GridDB WebAPI to retrieve data from the container

url = base_url + 'sql'

# Construct the request body

request_body = json.dumps([

{

"type": "sql-select",

"stmt": sql_query6

}

])

# Validate the constructed request body

#print(request_body)

# Disable debug output after running the query

http.client.HTTPConnection.debuglevel = 0

#Invoke the GridDB WebAPI

data_req6 = requests.post(url, data=request_body, headers=header_obj)

#Process the response received and construct a Pandas dataframe with the data from the response

myJson = data_req6.json()

product_features = pd.DataFrame(myJson[0]["results"], columns=[myJson[0]["columns"][0]["name"], myJson[0]["columns"][1]["name"]])

#product_features

def truncate_review(review, max_length=256):

return review[:max_length]

product_features['text'] = product_features['text'].apply(truncate_review)

# Load the tokenizer and sentiment-analysis pipeline (with GPU support)

tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased-finetuned-sst-2-english")

sentiment_analyzer = pipeline("sentiment-analysis", model="distilbert-base-uncased-finetuned-sst-2-english", device=-1) # Use CPU

# Define a function to truncate text to 512 tokens and tokenize in batches

def batch_tokenize(text_list, tokenizer, batch_size=64):

tokenized_batches = []

for i in range(0, len(text_list), batch_size):

batch = text_list[i:i + batch_size]

tokenized_batch = tokenizer(batch, padding=True, truncation=True, max_length=512, return_tensors="pt")

tokenized_batches.append(tokenized_batch)

return tokenized_batches

# Truncate and tokenize the 'features' column in batches

tokenized_batches = batch_tokenize(product_features['text'].tolist(), tokenizer)

# Perform sentiment analysis in batches (faster with GPU) with progress bar

results = []

for i, batch in tqdm(enumerate(tokenized_batches), total=len(tokenized_batches), desc="Processing batches"):

# Perform inference using the model

outputs = sentiment_analyzer.model(**batch)

predicted_classes = outputs.logits.argmax(dim=1) # Get predicted class indices (0 or 1 for the batch)

sentiments = ['POSITIVE' if cls == 1 else 'NEGATIVE' for cls in predicted_classes] # Map indices to sentiments

results.extend(sentiments) # Append all sentiments for the batch

# Map results back to the DataFrame

product_features['sentiment'] = results

# Summarize sentiments by store

sentiment_summary_df = product_features.groupby('store')['sentiment'].value_counts().unstack(fill_value=0)

sentiment_summary_df = sentiment_summary_df.rename(columns={'POSITIVE': 'positive_reviews', 'NEGATIVE': 'negative_reviews'}).reset_index()

# Show the final sentiment summary

#print(sentiment_summary_df)

Processing batches: 100%|████████████████████████████████████████████████████████████| 154/154 [07:21<00:00, 2.87s/it]

# Filter stores with more negative reviews than positive reviews

negative_dominant = sentiment_summary_df[sentiment_summary_df["negative_reviews"] > sentiment_summary_df["positive_reviews"]]

negative_dominant = negative_dominant.fillna(0) # Ensure no missing values

top_negative_dominant = negative_dominant.sort_values(by="negative_reviews", ascending=False).head(20)

# Filter stores with more positive reviews than negative reviews

positive_dominant = sentiment_summary_df[sentiment_summary_df["positive_reviews"] > sentiment_summary_df["negative_reviews"]]

positive_dominant = positive_dominant.fillna(0) # Ensure no missing values

top_positive_dominant = positive_dominant.sort_values(by="positive_reviews", ascending=False).head(20)

# Create stacked bar chart for stores with more negative reviews

fig_negative_dominant = go.Figure()

fig_negative_dominant.add_trace(go.Bar(

x=top_negative_dominant['store'],

y=top_negative_dominant['negative_reviews'],

name='Negative Reviews',

marker_color='crimson' # Red for negative sentiment

))

fig_negative_dominant.add_trace(go.Bar(

x=top_negative_dominant['store'],

y=top_negative_dominant['positive_reviews'],

name='Positive Reviews',

marker_color='green' # Green for positive sentiment

))

fig_negative_dominant.update_layout(

barmode='stack',

title='Top 20 Stores with More Negative Reviews than Positive Reviews',

xaxis_title='Store',

yaxis_title='Number of Reviews',

title_font_size=18,

xaxis_tickangle=-45,

legend_title_text='Review Type'

)

# Create stacked bar chart for stores with more positive reviews

fig_positive_dominant = go.Figure()

fig_positive_dominant.add_trace(go.Bar(

x=top_positive_dominant['store'],

y=top_positive_dominant['positive_reviews'],

name='Positive Reviews',

marker_color='green' # Green for positive sentiment

))

fig_positive_dominant.add_trace(go.Bar(

x=top_positive_dominant['store'],

y=top_positive_dominant['negative_reviews'],

name='Negative Reviews',

marker_color='crimson' # Red for negative sentiment

))

fig_positive_dominant.update_layout(

barmode='stack',

title='Top 20 Stores with More Positive Reviews than Negative Reviews',

xaxis_title='Store',

yaxis_title='Number of Reviews',

title_font_size=18,

xaxis_tickangle=-45,

legend_title_text='Review Type'

)

# Show both charts

fig_negative_dominant.show()

fig_positive_dominant.show()

Concluding Remarks¶

In this case study, we analyzed subscription box reviews and metadata using GridDB's powerful query engine. The ability to write SQL-like queries combined with faster retrieval times than traditional databases makes GridDB the perfect choice for use cases involving real-time processing. With the advent of AI, tools like Hugging Face can help analyze large volumes of data stored in GridDB to derive meaningful insights. Adoption of GridDB and AI technologies can greatly enhance value creation in businesses and unlock opportunities for innovation and growth in today's digital age.

If you have any questions about the blog, please create a Stack Overflow post here https://stackoverflow.com/questions/ask?tags=griddb .

Make sure that you use the “griddb” tag so our engineers can quickly reply to your questions.